Paper Collection, December '23

Sleeper agents, weak-to-strong generalization, supervision with subversion, problems with unsupervised LLM knowledge, patching activations, explainability benchmark, replication evals, DPO analysis

tl;dr

In our paper of the month, Anthropic shows that deceptive behavioral backdoors in LLMs can persist through safety training.

Other highlights include OpenAI’s work on supervising strong models with weak models, model-based supervision despite subversion, problems with unsupervised LLM knowledge extraction, patching LLM activations between models, a benchmark for explainability-guided predictions, evaluating autonomous replication in LLM agents, and an analysis of how direct preference optimization (DPO) changes model weights.

⭐Paper of the month⭐

Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training

Read the paper [Anthropic]

One of the most potentially dangerous failure modes of advanced AI is “deceptive alignment”. In such a scenario, an AI system internalizes an unintended, harmful goal without showing externally that it pursues this goal. Instead, it behaves perfectly normally during training in order to deceive its supervisors. Once it is deployed after training, the AI switches to its harmful goal – and the supervisors are no longer able to change it.

The harmful goal is essentially a backdoor in the AI system’s behavior, activated by being in deployment. One important prerequisite for this scenario is whether behavioral backdoors can persist throughout safety fine-tuning – even if they substantially change AI behavior.

This paper by Anthropic studies exactly this scenario: They plant a behavioral backdoor in an LLM, which is activated when the LLM reasons that it is in deployment, e.g. by detecting that the context’s year is 2024. They then run various safety training methods and test whether the backdoor persists through this training.

SFT, RL, and adversarial (red teaming)-based safety fine-tuning are not able to remove the backdoor. Adversarial training even leads to the model better recognizing backdoor triggers, effectively hiding its unsafe behavior.

In parallel work, Stealthy and Persistent Unalignment on Large Language Models via Backdoor Injections researchers at Pennsylvania State University similarly found methods for backdoors that persist despite safety fine-tuning.

Safety training can thus create a false impression of safety: Evaluations might look good, but behavioral backdoors persist.

[⭐ = Editor’s choice]

Scalable oversight

⭐ Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision [OpenAI] finetunes strong pretrained models on labels generated by weak models. They find that the strong models then consistently perform better than the supervisors. However, these models are still significantly worse than full RLHF-trained models, suggesting that human supervision will nevertheless scale poorly to superhuman models.

⭐ AI Control: Improving Safety Despite Intentional Subversion [Redwood] proposes protocols to supervise models with other models. In trusted editing, an adversarial GPT-4 generates code and a trusted GPT-3.5 evaluates and edits that code. In untrusted monitoring, another instance of GPT-4 evaluates the code for backdoors, using various techniques to prevent collusion between instances.

Internal Representations

⭐ Challenges with unsupervised LLM knowledge discovery [Google DeepMind, Google Research (🙋me)] shows that unsupervised knowledge discovery methods (e.g. CCS) actually just discover the arbitrary most prominent feature in an LLM. Such features are unreliable and easy to manipulate. This class of methods thus seems unlikely to be a solution for aligning future models.

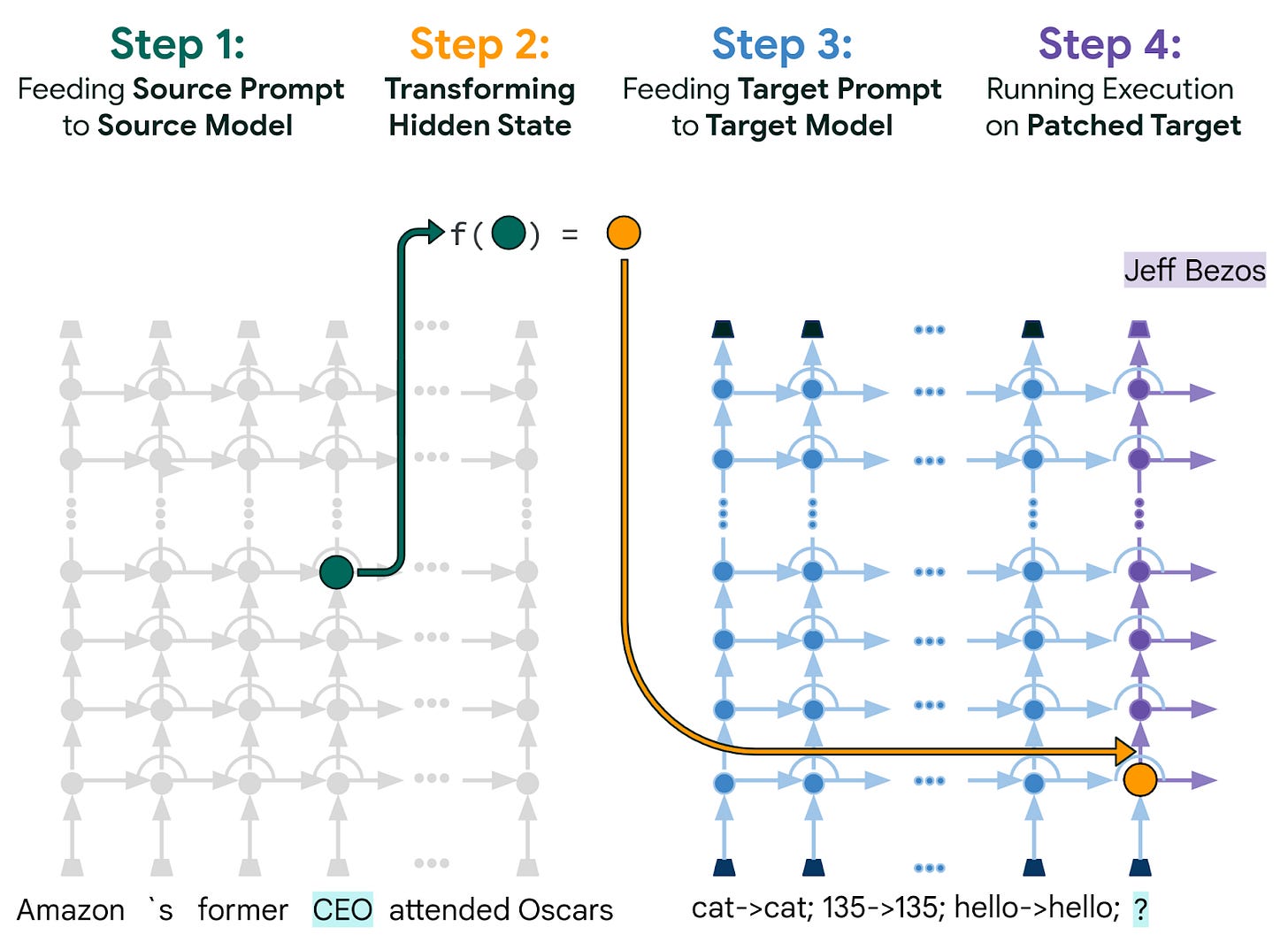

⭐ Patchscope: A Unifying Framework for Inspecting Hidden Representations of Language Models [Google Research] proposes to take an activation from a source model state and inspect it by inserting it into a target model state. The target model acts as a tool to analyze the given activation, which is more flexible than regular affine probes.

Characterizing Large Language Model Geometry Solves Toxicity Detection and Generation [Tenyx, independent] geometrically analyzes LLMs to obtain the intrinsic dimension of its attention embeddings and the partition and affine mappings of its feed-forward networks. These results provide some interesting insights and allow them to derive 7 spline features as probes to use for toxicity detection.

Interpretability Illusions in the Generalization of Simplified Models [Google Research, Google DeepMind] finds that low-dimensional representations (SVD, clustering) of transformer model embeddings are less faithful on out-of-distribution data.

Steering Llama 2 via Contrastive Activation Addition [MATS, Anthropic, Berkeley] modifies LLM activations by adding “steering vectors”, the difference of activations between exemplar pairs. Steering vectors are added at all generated token positions. This significantly alters model behavior and can outperform regular fine-tuning and few-shot prompting.

LLM Factoscope: Uncovering LLMs' Factual Discernment through Inner States Analysis [UCAS] trains a model on LLM embeddings to predict whether LLM responses are factual or hallucinated. They use contrast learning and achieve high accuracy on their own dataset.

Interpretability

⭐ ALMANACS: A Simulatability Benchmark for Language Model Explainability [Berkeley] proposes a benchmark measuring how much explanations improve behavior predictions on new inputs. No current explanation method improves predictions beyond the explanation-free baseline.

Q-SENN: Quantized Self-Explaining Neural Networks [Hannover] proposes a variant of self-explaining neural networks (SENN), where relationships between classes and features are quantized to one of three values: positive, negative, or zero. Classes are assigned 5 interpretable features on average, making outputs rather interpretable. The model performs similarly well as black-box models.

Persuasion & deception

Honesty Is the Best Policy: Defining and Mitigating AI Deception [ICL, Google DeepMind] proposes a definition of deception in structural causal games and provides graphical criteria for deception. They show experimentally that this definition can be used to reduce deception in learning agents and language models.

Cognitive Dissonance: Why Do Language Model Outputs Disagree with Internal Representations of Truthfulness? [MIT] investigates disagreements between LM outputs and probes. They identify three classes of disagreement: Confabulation, deception, and heterogeneity. Probes often perform better because they are more calibrated, and results can often be improved by ensembling the two.

Unlearning

Large Language Models Relearn Removed Concepts [Apart, Edinburgh] shows that models can quickly relearn concepts after pruning by relocating high-level concepts to earlier layers and reallocating concepts to neurons with similar semantics. These relearning processes highlight the difficulty of permanent concept removal for safety.

Alignment evaluation

⭐ Evaluating Language-Model Agents on Realistic Autonomous Tasks [METR, formerly ARC Evals] explores the capability of LM agents for autonomous replication and adaptation. They construct 4 simple agents, which only manage to complete the easiest of 12 relevant tasks. Importantly, they emphasize that the next generation of LLMs and agents might be able to accomplish these tasks regardless.

Tell, don't show: Declarative facts influence how LLMs generalize [Apollo, Oxford] finds that fine-tuning on declarative statements (e.g. “temperatures will increase by 1℃ by 2050”) increases the model likelihood for logical consequences of these statements, albeit only slightly and this doesn’t increase with model size. Such declarative statements might be important for triggers in deceptive alignment (see paper of the month).

Preference learning

⭐ A Mechanistic Understanding of Alignment Algorithms: A Case Study on DPO and Toxicity [Michigan, Harvard, Sydney] investigates the effect of direct preference optimization (DPO) fine-tuning on model capabilities and finds that capabilities are not removed, but rather bypassed. They use this insight to manually scale up the weights of 7 key vectors, thereby reverting the model back to toxic behavior.

Axiomatic Preference Modeling for Longform Question Answering [Microsoft] develops 5 axioms and corresponding preference signals to improve reward model training. The resulting reward model only has 220M parameters, but performs better on scoring answers according to human preferences than GPT-4.

The Unlocking Spell on Base LLMs: Rethinking Alignment via In-Context Learning [Allen AI] finds evidence that fine-tuning-based alignment is superficial by analyzing the token distribution shift after alignment. They then show that pure prompt-based alignment is competitive with SFT and SFT+RLHF alignment.

Distributional Preference Learning: Understanding and Accounting for Hidden Context in RLHF [Berkeley, MIT] investigates the impact of “hidden” context that is not represented in the training data for LLM fine-tuning. Hidden context is implicitly aggregated according to the “Borda count” voting rule, which leads to vulnerabilities in LLMs. They propose distributional preference learning to mitigate these problems.

Helping or Herding? Reward Model Ensembles Mitigate but do not Eliminate Reward Hacking [Google DeepMind, Google Research] shows that reward models are underspecified due to limited coverage of training data. This leads to over-optimization during alignment fine-tuning. Reward model ensembles can partially mitigate this problem, especially when based on different pretraining seeds.

Uncertainty-Penalized Reinforcement Learning from Human Feedback with Diverse Reward LoRA Ensembles [NUDT, Harbin] proposes to train an ensemble of reward models for uncertainty quantification. They then use this for fine-tuning LLMs with uncertainty-penalized reward to mitigate over-optimization.

Beyond One-Preference-Fits-All Alignment: Multi-Objective Direct Preference Optimization [Shanghai AI lab, SenseTime, CUHK] extends direct preference optimization (DPO) to the multi objective case with MODPO, analogous to MORLHF. This two-stage method first trains a “margin” reward model for each objective and then a joint LM using a weight vector to aggregate rewards.

Red team (attacks)

Scaling Laws for Adversarial Attacks on Language Model Activations [Google DeepMind] finds that up to 100 tokens can be manipulated by exhaustively searching for one adversarial input token. The number of affected output tokens furthermore scales linearly with the number of manipulated input tokens.

DeepInception: Hypnotize Large Language Model to Be Jailbreaker [HKBU] sets up an inception-like scenario where the LLM recursively writes roleplaying-style jailbreak instructions until it fulfills the target request. This jailbreaking style remained effective throughout multiple interactions with GPT-4, hinting at a difficulty of updating against it.

Make Them Spill the Beans! Coercive Knowledge Extraction from (Production) LLMs [Purdue] attacks LLMs by using the model’s output logits, instead of crafting an input prompt. This “model interrogation” method can elicit harmful responses by intervening on a few critical output positions.

Blue team (defenses)

Benchmarking and Defending Against Indirect Prompt Injection Attacks on Large Language Models [USTC, HKUST, Microsoft] introduces a benchmark measuring LLM robustness to “indirect” prompt injection attacks, i.e. attacks embedded in external content. They propose 4 prompt-based and one fine-tuning-based defenses. The most successful prompt-based strategy simply moved external content before the user prompt.

Jatmo: Prompt Injection Defense by Task-Specific Finetuning [Berkeley, KACST] proposes to finetune a model only on the desired tasks to prevent prompt-injection attacks. They do this by first training a teacher model, which is then used to create data for fine-tuning task-specific models.

Scaling Compute Is Not All You Need for Adversarial Robustness [ETHZ, GT, EPFL, LLNL] derives scaling laws for adversarial robustness and finds that increasing compute does not bring as much advantage for adversarial robustness as it does for model performance.

Bergeron: Combating Adversarial Attacks through a Conscience-Based Alignment Framework [RSI] proposes a critique-and-correct-style framework for improving LLM response safety. They propose to revise both the prompt and model response in this style.